Date: 25/11/2023

Key Points:

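'Skeleton-of-Thought' (SoT), a method from Microsoft Research and Tsinghua University, speeds up Large Language Models (LLMs) by having the model outline its answer first and then expanding the outline's points in parallel.

In tests on the Vicuna-80 dataset, SoT delivered speed-ups of 1.13x to 2.39x, making LLMs more practical for real-time applications.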

Microsoft Research and Tsinghua University have introduced 'Skeleton-of-Thought' (SoT), a method for speeding up response generation in Large Language Models (LLMs) such as GPT-4 and LLaMA. It targets a key limitation of current LLMs: they generate a response one token at a time, and the resulting latency has impeded their use in latency-sensitive applications such as chatbots and industrial controllers.

Unlike earlier acceleration methods, which required complex modifications to the models themselves, SoT leaves the model's internals untouched and changes how its output is organized. The method works in two stages. First, the LLM writes a skeleton of the response: a short, high-level outline of the points it will cover. Second, each point in the skeleton is expanded in parallel, and the expansions are assembled into the final answer. Because the points are expanded concurrently rather than one after another, the response arrives sooner without significant changes to the model.
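The two stages can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: the prompt wording, the `parse_skeleton` helper, and the `llm` callable (any function that takes a prompt string and returns text) are all assumptions made for the example, and the parallel stage here uses threads to issue concurrent requests.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Hypothetical prompt templates; the wording is an assumption for this sketch.
SKELETON_PROMPT = "Give a short numbered outline (3-10 words per point) answering: {question}"
EXPAND_PROMPT = "Question: {question}\nOutline:\n{skeleton}\nExpand only point {index}: {point}"


def parse_skeleton(text: str) -> List[str]:
    """Extract the points from lines that look like '1. Point' or '2) Point'."""
    points = []
    for line in text.splitlines():
        match = re.match(r"\s*\d+[.)]\s*(.+)", line)
        if match:
            points.append(match.group(1).strip())
    return points


def skeleton_of_thought(question: str, llm: Callable[[str], str], max_workers: int = 4) -> str:
    """Stage 1: ask for an outline. Stage 2: expand every point concurrently."""
    skeleton = llm(SKELETON_PROMPT.format(question=question))
    points = parse_skeleton(skeleton)
    if not points:
        # No usable outline: fall back to a single ordinary request.
        return llm(question)

    prompts = [
        EXPAND_PROMPT.format(question=question, skeleton=skeleton, index=i, point=p)
        for i, p in enumerate(points, start=1)
    ]
    # pool.map preserves input order, so expansions line up with their points.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        expansions = list(pool.map(llm, prompts))

    return "\n".join(
        f"{i}. {p}: {e}" for i, (p, e) in enumerate(zip(points, expansions), start=1)
    )


if __name__ == "__main__":
    # Stand-in for a real model call, used only to show the control flow.
    def stub_llm(prompt: str) -> str:
        if "Expand only point" in prompt:
            return "(expanded text)"
        return "1. Define the goal\n2. Gather data\n3. Evaluate"

    print(skeleton_of_thought("How do I train a classifier?", stub_llm))
```

With a hosted model, `llm` would wrap an API call so the expansion requests run concurrently. With a locally served model, the same effect would more likely come from batching the expansion prompts into one decoding pass.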

The researchers evaluated SoT on several LLMs using the Vicuna-80 dataset, which covers domains such as coding and writing. Speed-ups ranged from 1.13x to 2.39x depending on the model, reportedly without compromising response quality. By cutting response latency, SoT could make LLMs more practical in real-time, latency-critical applications.
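To put those factors in terms of waiting time, a speed-up of s means a response takes 1/s of the original time, so the latency saved is 1 - 1/s. The short calculation below assumes the reported figures are ratios of end-to-end response time (baseline divided by SoT); the helper name `latency_reduction` is made up for this example.

```python
def latency_reduction(speedup: float) -> float:
    """Fraction of response time saved for a given speed-up factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup


# The two ends of the range reported for SoT.
for factor in (1.13, 2.39):
    print(f"{factor:.2f}x -> {latency_reduction(factor):.1%} less waiting time")
```

Under that assumption, the low end of the range corresponds to roughly an 11.5% shorter wait and the high end to roughly 58.2%.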

About the author

Evalest's tech news is crafted by cutting-edge Artificial Intelligence (AI), meticulously fine-tuned and overseen by our elite tech team. Our summarized news articles stand out for their objectivity and simplicity, making complex tech developments accessible to everyone. With a commitment to accuracy and innovation, our AI captures the pulse of the tech world, delivering insights and updates daily. The expertise and dedication of the Evalest team ensure that the content is genuine, relevant, and forward-thinking.

Related news

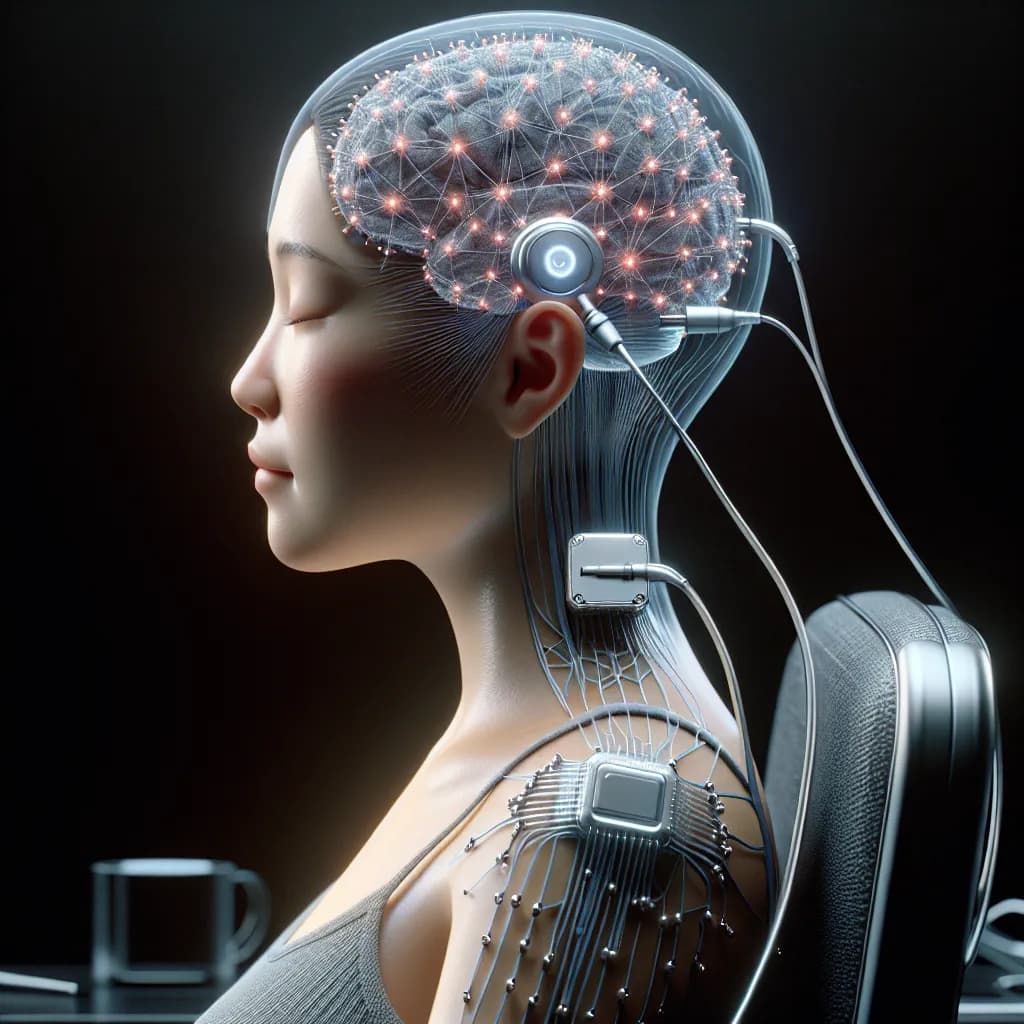

Neuralink Achieves Groundbreaking Success in Human Brain Chip Implant

Neuralink's recent success in implanting a brain-computer interface in a human marks a pivotal moment in neurotechnology.

OpenAI's Sam Altman on AGI: Insights and Future Directions

Exploring Sam Altman's perspective on Artificial General Intelligence (AGI), focusing on AI safety, ethics, global cooperation, GPT advancements, and energy sustainability.

Top 10 Breakthrough Technologies of 2024

Exploring the top ten breakthrough technologies of 2024, from AI advancements to novel health solutions and sustainable energy innovations.